Algorithmic Accountability for Learning Analytics

(Update 26.11.19: An extended version of this talk is now available as a webinar.)

JISC in the UK is providing the education sector with a valuable service through its Effective Learning Analytics programme. What caught my eye recently was Niall Sclater's excellent blog, with podcasts of his interviews with leading UK practitioners on the ethical dimensions of analytics.

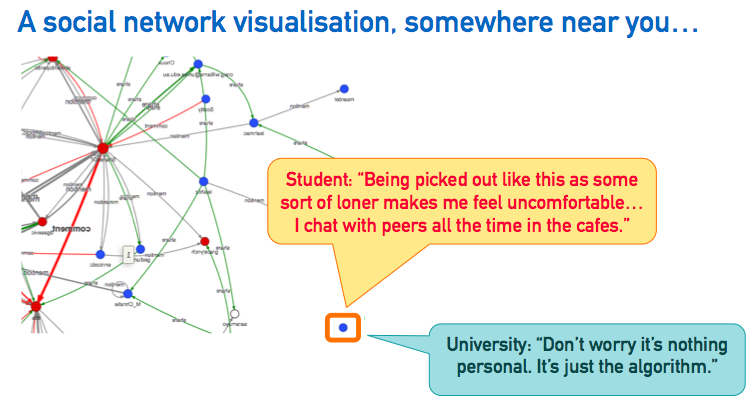

There are many insights to gain from these podcasts, but I was particularly attuned to any mention of making the algorithms underpinning analytics intelligible, and to whom. This came up several times when the interviewees discussed to what extent students should be shown analytics, and how to explain their inner workings helpfully to three audiences: students themselves, the teaching staff expected to trust these new tools, and analytics researchers keen to understand how, for instance, a new analytics product works. If handing over an SQL export or a full LMS log isn't considered helpful, what is the right level of detail, and summarised in what ways, for us to be "transparent"? Listening to this, it struck me that this is in fact a technology-enhanced learning design problem: how do we engage non-expert audiences with very complex material to deliver quality 'learning outcomes'? There are a few PhDs in that. (I note in passing the Open Learner Models research strand from AIED, which is now in dialogue with learning analytics.)

Niall's interviews suggest that students aren't actually very curious, which in my view reflects the data illiteracy of society at large. I certainly intend to make my students very curious about the analytics we run on them, but then, they're data science students. Some vendors of predictive models seem to be banking on customers not asking too many questions: in my interactions with them, they have yet to develop any conception of a service to help a client tune the algorithms to its context.

Back to the JISC interviews. I see the material here, and work on the ethics of learning analytics (e.g. Pardo & Siemens 2014; Prinsloo & Slade 2015), as coming at the problem from one angle, namely ethics, legal compliance, student support and educational institutional processes. Another related but slightly different way to approach the problem is to ask: what would it mean for a learning analytics system to be accountable to its stakeholders?

This issue is by no means restricted to learning analytics, of course. Education is, as ever, slow out of the blocks compared to other sectors that have been transformed by technology. What is encouraging is that as algorithms pervade societal life, they are moving from the sorts of things that only computer scientists and mathematicians would discuss to becoming the object of far wider academic and indeed media attention (try a web news search on algorithms). As we (and we might ask, who is "we"?) delegate increasing powers to machines to make judgements about fuzzy human qualities such as 'employability', 'credit worthiness', or 'likelihood of committing a crime', many are now asking how the behaviour of algorithms can be made more transparent and accountable. But in what senses are they opaque, and to whom? What is meant by "accountable"?

The learning analytics community can learn something from our colleagues in other fields as they wrestle with these questions. I love the provocation piece for the Governing Algorithms conference, and the sparkling set of videos. Reflect on Tarleton Gillespie’s analysis of Google’s and Apple’s algorithms. Check out Paul Dourish’s recent lecture on the Social Lives of Algorithms. I learnt a lot from Solon Barocas’ tutorial on the ways that machine learning can replicate structural injustice if deployed unethically for recruitment purposes. Watch Frank Pasquale on The Promise (and Threat) of Algorithmic Accountability in the Black Box Society, and be afraid…

In a series of talks* I am test-flying my thoughts as I get to grips with this work. I propose a set of lenses that we can bring to bear on a given learning analytics system to define "accountability" at multiple levels and from multiple angles. It turns out that algorithmic accountability may be the wrong focus, or rather, just one piece of the jigsaw puzzle. Intriguingly, even if you can look inside the algorithmic 'black box', which is imagined to lie in the system's code, there may be little of use there. I suggest that a human-centred informatics approach is an appropriate one to embrace when considering "the system" holistically, where the aggregate quality we are after might be dubbed Analytic System Integrity. I conclude by working through a couple of examples from current projects as a form of 'Analytic System Integrity audit', to show where one can identify weaknesses.

May 6 update: The following replay is from a talk at the UCL Institute of Education (Knowledge Lab), held jointly with the UCL Interaction Centre. It is v2 of the talk, updating the one I posted earlier from the University of South Australia's Digital Learning Week.

* My thanks to colleagues for hosting these events: Kirsty Kitto (Queensland University of Technology, Institute for Future Environments), Shane Dawson (University of South Australia, Digital Learning Week), UCL (Manolis Mavrikis), and The Open University (Rebecca Ferguson).

This topic is being discussed as part of LASI-Denmark 2016: https://groups.google.com/forum/#!topic/learninganalytics/Yt2siQnlYtY

These slides were updated for a half-day training session at SoLAR LASI 2017 Michigan:

https://www.slideshare.net/sbs/black-box-learning-analytics-beyond-algorithmic-transparency

Mar 25th, 2016 at 11:11 pm

Algorithmic Accountability for #LearningAnalytics? Here’s my #dlw2016 replay + thoughts on @sclater interviews https://t.co/FQjWr8I92j

May 31st, 2020 at 2:37 am

[…] be held accountable for the student’s failure? Williamson (2016) refers to the work of Simon Buckingham Shum around the question of algorithmic accountability within learning analytics as warranting […]