PhD Scholarship: Teamwork Analytics for Evidence-Based Reasoning

Announcing a 3-year scholarship, AUD$35,000/year, starting July 2020

Supervision Team

Simon Buckingham Shum (UTS:CIC) and Tim van Gelder (U. Melbourne)

This project is a collaboration between UTS:CIC and the University of Melbourne SWARM Project. The successful candidate will be based in Sydney, also spending time in Melbourne.

Visit the CIC PhD Scholarships page for full details. Please email us to express interest or ask questions; if we can see a potential fit, we’ll advise you on writing your proposal.

The Challenge

The real-world challenge: improving collaborative EBR

The challenges facing society are so complex that multiple kinds of expertise are needed. Consider security, science, law, health, policy-making, finance. The team is the ubiquitous organisational unit, but the quality of its reasoning, especially under pressure, can vary dramatically. Problems are not provided in neat, well-defined packages: a team must frame problems in creative ways that lead to insights, and resolve uncertainties around possible responses, making the best possible use of evidence, plus their own judgement. Studies of how teams engage in such “sensemaking” highlight the blinkers that can blindside teams, and how the ways that the problem is expressed and visually represented can help or hinder (Weick, 1995). We will term this whole process Evidence-Based Reasoning (EBR). (We note of course that politics and social dynamics are unavoidable whenever people come together, and effective team members learn how to navigate these dynamics.)

Improving collaborative EBR is an interesting scientific and design challenge. A successful support system (i.e. ways of working + enabling tools) must respect the principles of good reasoning, as determined by fields such as logic, argumentation and epistemology, and the domain-specific knowledge (e.g. emergency response, engineering, social work, counter-intelligence). At the same time, it must accommodate the strengths, weaknesses and vagaries of human reasoners, which is the terrain of cognitive and social psychologists. If part of the support system is interactive software, then it must have a good user interface and a solid underlying architecture. Assessing the resulting performance is a difficult evaluation problem. Building such systems is therefore inherently multidisciplinary.

How do we better equip teams for collaborative EBR? From an educational perspective, teamwork, problem solving and critical thinking skills are now among the most in-demand ‘transferable competencies’ (Fiore et al., 2018). The challenge of assessing these skills, and equipping graduates with them, is at the heart of the learning and teaching strategies at UTS and U. Melbourne.

The technology support challenge:

In some fields, there are specialist tools for modelling and simulation that assist analysts by managing constraints in the problem. Even with machine intelligence, however, the agency typically rests with the human analysts to decide how much weight to give to the machine’s output. Most other fields do not have such tools: collaborative EBR is typically supported by general-purpose information technologies such as word processors, spreadsheets, databases, and project planners to help with managing information and producing reports. Similarly, generic communication tools dominate, such as email, chat, video conferencing and phone. In most cases, the reasoning itself is left wholly to the human reasoners.

There have been remarkably few attempts to provide direct technological support for the processes of inference and judgement that are at the heart of collaborative EBR. Moreover, those attempts have had little impact on the way it is actually conducted in most places (van Gelder, 2012). There are methods and software tools for facilitating group processes and visualising team reasoning, but these require quite an advanced facilitation and software skillset (e.g. Culmsee and Awati, 2013; Okada et al., 2008; Selvin et al., 2010).

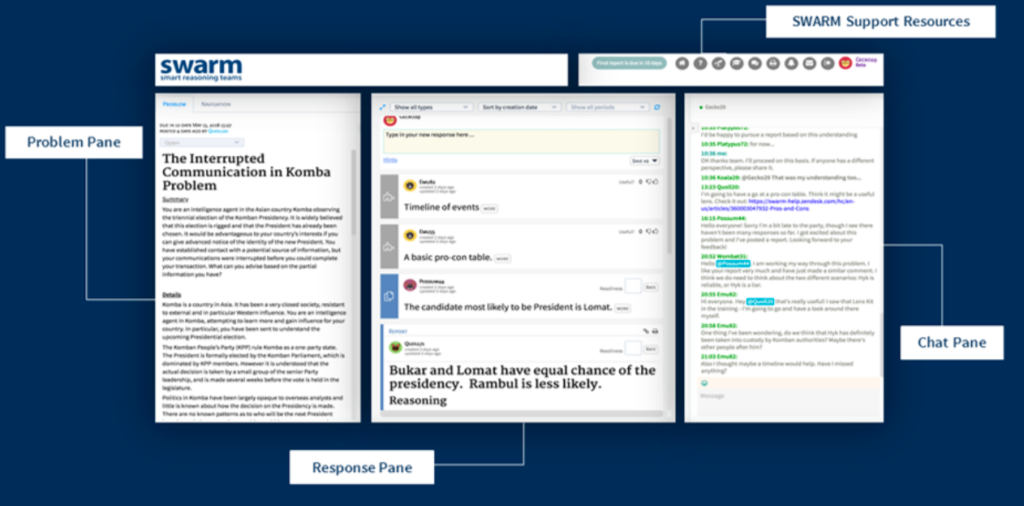

Our interest is in developing computer support to improve the collaborative EBR of geographically and often temporally distributed teams, in a form that requires no specialist skills beyond the use of now-familiar collaboration tools. SWARM is an online platform emerging from an ongoing research project to improve the kind of collaborative EBR undertaken by intelligence analysts making sense of complex sets of qualitative and quantitative information of varying reliability. However, most other domains operate under these same conditions, so we hypothesise that SWARM has broader potential, and specifically, in this project, potential for education and training. SWARM is based on three design principles: cultivating user engagement, exploiting natural expertise, and supporting rich collaboration (van Gelder et al., 2018). Central to its approach is the upskilling of team members to equip them with different EBR skills (see in particular the Lens Kit).

Figure: The SWARM workspace

Recent large-scale empirical evaluations, in which teams of analysts tackled complex challenges with or without SWARM, indicated that the quality of the reports produced by SWARM teams was significantly better than that of reports produced by analysts using normal methods (van Gelder et al., in preparation). In a follow-up project, “super-teams” on the platform produced reasoning so good it could plausibly be called “super-reasoning” (van Gelder & de Rozario, 2017), analogous to “super-forecasting” (Tetlock & Gardner, 2015).

This CIC seminar is a great introduction to the work so far:

Learning Analytics for SWARM

The encouraging evidence of SWARM’s effectiveness makes it an attractive candidate platform for use in educational/training contexts. While evaluation of final reports (i.e. the team’s product) is a conventional measure of team performance, and certainly one that educators will be interested in, this is not the only possible indicator of improvement. The emergence of data science, activity-based analytics and visualisation opens new possibilities for tracking the process that teams are following. Learning Analytics connects such techniques to what is known about the teaching and learning of teamwork, and could make the assessment of team performance more rigorous and more cost-effective.

This PhD therefore focuses on inventing and validating new forms of automated team analytics for collaborative EBR, to provide insights into both process and product. Such analytics might enable not only coaches and researchers to gain insights into a team’s effectiveness, but also the teams themselves to monitor their work in real time, or critically review their project on completion. Further, real-time analytics can be used to shape the collaborative environment itself, resulting in better collaboration and better outputs. Some prototype analytics have already been developed to summarise participants’ contributions and interactions. This PhD will build on this work and synthesise the literature with insights from the SWARM team and educators, in order to define, design, implement and evaluate automated analytics in different contexts, spanning education and training, research, and potentially more authentic deployments with professional teams.

Figure: Early version of the SWARM group dynamics dashboard. Upper diagrams show levels of interaction among team members working on a particular problem.

Relevant analytics techniques include, but are not limited to:

- Text analysis to identify significant contributions to the team communications and the report they are producing

- Social network analysis to identify significant interaction patterns among team members

- Process mining to identify significant sequences in the actions that individuals engage in, within or between sessions

- Statistical techniques to identify significant differences between teams
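As a rough illustration of two of the techniques listed above, the following sketch counts who interacts with whom (a very basic form of social network analysis) and which pairs of actions follow one another in each member's activity (a very basic form of sequence mining). The event log, member names, action labels and function names are all hypothetical and chosen only for illustration; they do not reflect SWARM's actual data model or its existing prototype analytics.

```python
from collections import Counter, defaultdict

# Hypothetical, time-ordered event log: (user, action, target_user).
# This is invented sample data, not a real SWARM export.
EVENTS = [
    ("ana", "post_evidence", None),
    ("ben", "comment", "ana"),
    ("cy", "comment", "ana"),
    ("ana", "edit_report", None),
    ("ben", "rate", "cy"),
    ("ana", "comment", "ben"),
    ("ben", "edit_report", None),
    ("ana", "comment", "ben"),
]


def interaction_counts(events):
    """Count directed interactions (source -> target) between members."""
    counts = Counter()
    for user, _action, target in events:
        if target is not None and target != user:
            counts[(user, target)] += 1
    return counts


def weighted_degrees(counts):
    """Return (in_degree, out_degree) Counters from directed edge counts."""
    in_deg, out_deg = Counter(), Counter()
    for (src, dst), n in counts.items():
        out_deg[src] += n
        in_deg[dst] += n
    return in_deg, out_deg


def action_bigrams(events):
    """Count consecutive action pairs within each member's own sequence."""
    per_user = defaultdict(list)
    for user, action, _target in events:
        per_user[user].append(action)
    bigrams = Counter()
    for actions in per_user.values():
        bigrams.update(zip(actions, actions[1:]))
    return bigrams


if __name__ == "__main__":
    edges = interaction_counts(EVENTS)
    in_deg, out_deg = weighted_degrees(edges)
    print("edges:", dict(edges))
    print("in-degree:", dict(in_deg))
    print("out-degree:", dict(out_deg))
    print("bigrams:", dict(action_bigrams(EVENTS)))
```

Real analytics would need richer models (e.g. timestamps, session boundaries, content of contributions) and validation against educators' judgements; the counts here are only a starting point for that kind of work.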

Candidates

In addition to the broad skills and dispositions that we are seeking in all candidates (see CIC’s PhD homepage), you should have:

- A Masters degree, Honours distinction or equivalent with at least above-average grades in computer science, mathematics, statistics, or equivalent

- Analytical, creative and innovative approach to solving problems

- Strong interest in designing and conducting quantitative, qualitative or mixed-method studies

- Strong programming skills in at least one relevant language (e.g. R, Python)

- Experience with web log analysis, statistics and/or data science tools.

It is advantageous if you can evidence:

- Design and implementation of user-centred software, especially data/information visualisations

- Skill in working with non-technical clients to involve them in the design and testing of software tools

- Knowledge and experience of natural language processing/text analytics

- Familiarity with the scholarship in relevant areas (e.g. high performance teams; collective intelligence; collaborative problem solving)

- Peer-reviewed publications

Interested candidates should contact the team to open a conversation: Simon.BuckinghamShum@uts.edu.au; tgelder@unimelb.edu.au

We will discuss your ideas with you to help sharpen up your proposal, which will be competing with others for a scholarship. Please follow the application procedure for the submission of your proposal.

References

Culmsee, P. and Awati, K. (2013). The Heretic’s Guide to Best Practices: The Reality of Managing Complex Problems in Organisations. iUniverse.

Fiore, S. M., Graesser, A., & Greiff, S. (2018). Collaborative problem-solving education for the twenty-first-century workforce. Nature Human Behaviour, 2(6), 367–369.

Okada, A., Buckingham Shum, S. and Sherborne, T. (Eds.) (2008). Knowledge Cartography: Software Tools and Mapping Techniques. London, UK: Springer. (Second Edition 2014)

Selvin, A. M., Buckingham Shum, S.J. and Aakhus, M. (2010). The practice level in participatory design rationale: studying practitioner moves and choices. Human Technology: An Interdisciplinary Journal of Humans in ICT Environments, 6(1), pp. 71–105.

Tetlock, P., & Gardner, D. (2015). Superforecasting: The Art and Science of Prediction. London: Random House.

van Gelder, T.J. (2012). Cultivating Deliberation for Democracy. Journal of Public Deliberation. 8 (1), Article 12.

van Gelder, T., & de Rozario, R. (2017). Pursuing Fundamental Advances in Human Reasoning. In T. Everitt, B. Goertzel, & A. Potapov (Eds.), Artificial General Intelligence (Vol. 10414, pp. 259–262). Cham: Springer International Publishing.

van Gelder, T., de Rozario, R., & Sinnott, R. O. (2018). SWARM: Cultivating Evidence Based Reasoning. Computing in Science & Engineering. Downloadable from http://bit.ly/cultivatingEBR

van Gelder, T. J. et al (in preparation). Prospects for a Fundamental Advance in Analytical Reasoning.

Weick, K. (1995). Sensemaking in Organizations. Thousand Oaks, CA, USA: Sage.