Grounded Prompt Engineering for researchers

This has been a year of rapid learning for us about large language models: not as engines for educational chatbots that promote deeper learning (also of great interest), but as textual analysis tools for deductive coding, a task that until recently was beyond machines.

In this paper we document some progress in the research led by doctoral researcher Ram Ramanathan, co-supervised with Lisa Lim, with prompt engineering expertise from Naz Rezazadeh Mottaghi.

Ram’s PhD is focused on Belonging Analytics: the use of data, analytics and AI to understand university students’ sense of belonging. He’ll present this work in a few weeks at the 15th International Learning Analytics and Knowledge Conference (LAK25):

Sriram Ramanathan, Lisa-Angelique Lim, Nazanin Rezazadeh Mottaghi, and Simon Buckingham Shum. 2025. When the Prompt becomes the Codebook: Grounded Prompt Engineering (GROPROE) and its application to Belonging Analytics. In LAK25: The 15th International Learning Analytics and Knowledge Conference (LAK 2025), March 03–07, 2025, Dublin, Ireland. (ACM, New York, NY, USA, 13 pages). https://doi.org/10.1145/3706468.3706564 [Eprint]

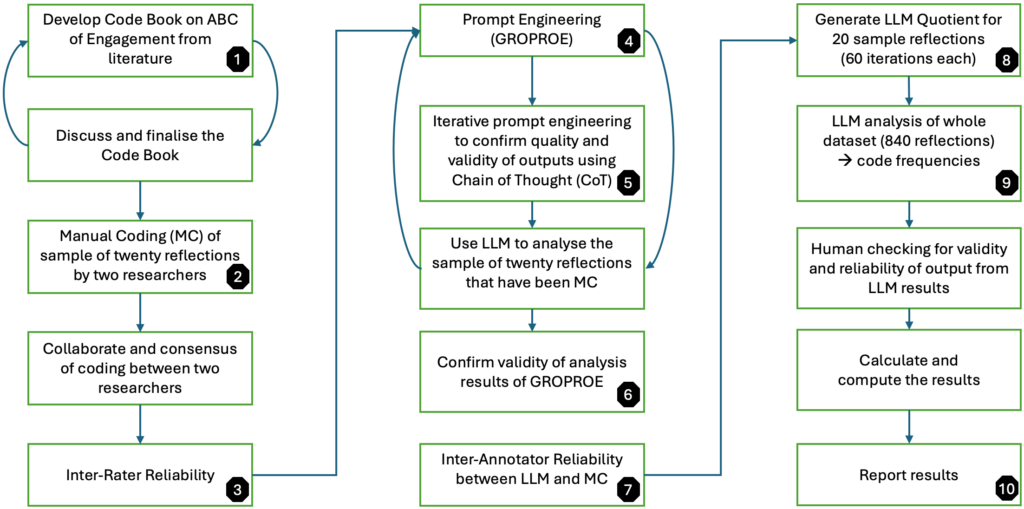

Abstract: With the emergence of generative AI, the field of Learning Analytics (LA) has increasingly embraced the use of Large Language Models (LLMs) to automate qualitative analysis. Deductive analysis requires theoretical or other conceptual grounding to inform coding. However, few studies detail the process of translating the literature into a codebook, and then into an effective LLM prompt. In this paper, we introduce Grounded Prompt Engineering (GROPROE) as a systematic process to develop a literature-grounded prompt for deductive analysis. We demonstrate our GROPROE process on a dataset of 860 written reflections, coding for students’ affective engagement and sense of belonging. To evaluate the quality of the coding we demonstrate substantial human/LLM Inter-Annotator Reliability (IAR). To evaluate the consistency of LLM coding, a subset of the data was analysed 60 times using the LLM Quotient showing how this stabilized for most codes. We discuss the dynamics of human-AI interaction when following GROPROE, foregrounding how the prompt took over as the iteratively revised codebook, and how the LLM provoked codebook revision. The contributions to the LA field are threefold: (i) GROPROE as a systematic prompt-design process for deductive coding grounded in literature, (ii) a detailed worked example showing its application to Belonging Analytics, and (iii) implications for human-AI interaction in automated deductive analysis.
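To make the two evaluation ideas in the abstract concrete, here is a minimal sketch in Python. It rests on assumptions: the abstract does not say which agreement statistic was used, so Cohen's kappa stands in as one common choice for human/LLM agreement, and the per-item "modal agreement" share is only a simple illustration of run-to-run consistency. It is not the LLM Quotient described in the paper. All function names and the toy labels are hypothetical.

```python
from collections import Counter


def cohen_kappa(a, b):
    """Cohen's kappa for two equal-length label sequences (assumed metric)."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(a)
    # Observed agreement: share of items where both raters gave the same code.
    observed = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    count_a, count_b = Counter(a), Counter(b)
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


def modal_agreement(runs):
    """For each item, the share of repeated runs that gave the most common label.

    Illustrative consistency measure only; NOT the paper's LLM Quotient.
    """
    shares = []
    for labels in zip(*runs):  # one tuple of labels per item, across runs
        top_count = Counter(labels).most_common(1)[0][1]
        shares.append(top_count / len(labels))
    return shares


# Toy data (hypothetical): 1 = code present, 0 = code absent.
human = [1, 0, 1, 1, 0, 0, 1, 0]
llm = [1, 0, 1, 0, 0, 0, 1, 1]
kappa = cohen_kappa(human, llm)

# Three repeated LLM runs over the same four items.
runs = [
    [1, 0, 1, 0],
    [1, 0, 1, 1],
    [1, 0, 0, 1],
]
stability = modal_agreement(runs)

print(f"kappa = {kappa:.2f}")
print("per-item stability:", [round(s, 2) for s in stability])
```

In a setup like the one the abstract describes, `runs` would hold the repeated codings of the same data subset, and items whose share stays well below 1.0 would flag codes whose prompt wording may need revision.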