Tackling cognitive bias with CHI tools

CHI is the premier conference on Computer-Human Interaction, though this year’s conference isn’t meeting due to COVID-19 (but see the Proceedings if you’re not familiar with its amazing breadth and depth). However, some of the workshops are going ahead online, including a new one on Detection and Design for Cognitive Biases in People & Computing Systems:

“With social computing systems and algorithms having been shown to give rise to unintended consequences, one of the suspected success criteria is their ability to integrate and utilize people’s inherent cognitive biases. Biases can be present in users, systems and their contents. With HCI being at the forefront of designing and developing user-facing computing systems, we bear special responsibility for increasing awareness of potential issues and working on solutions to mitigate problems arising from both intentional and unintentional effects of cognitive biases.

This workshop brings together designers, developers, and thinkers across disciplines to re-define computing systems by focusing on inherent biases in people and systems and work towards a research agenda to mitigate their effects. By focusing on cognitive biases from a content or system as well as from a human perspective, this workshop will sketch out blueprints for systems that contribute to advancing technology and media literacy, building critical thinking skills, and depolarization by design.”

The papers are all open access, so jump in and see what an interesting range of contributions this event attracted. The workshop brought together an eclectic mix of expertise and interests, and made fun use of a Miro board for sticky-note exercises.

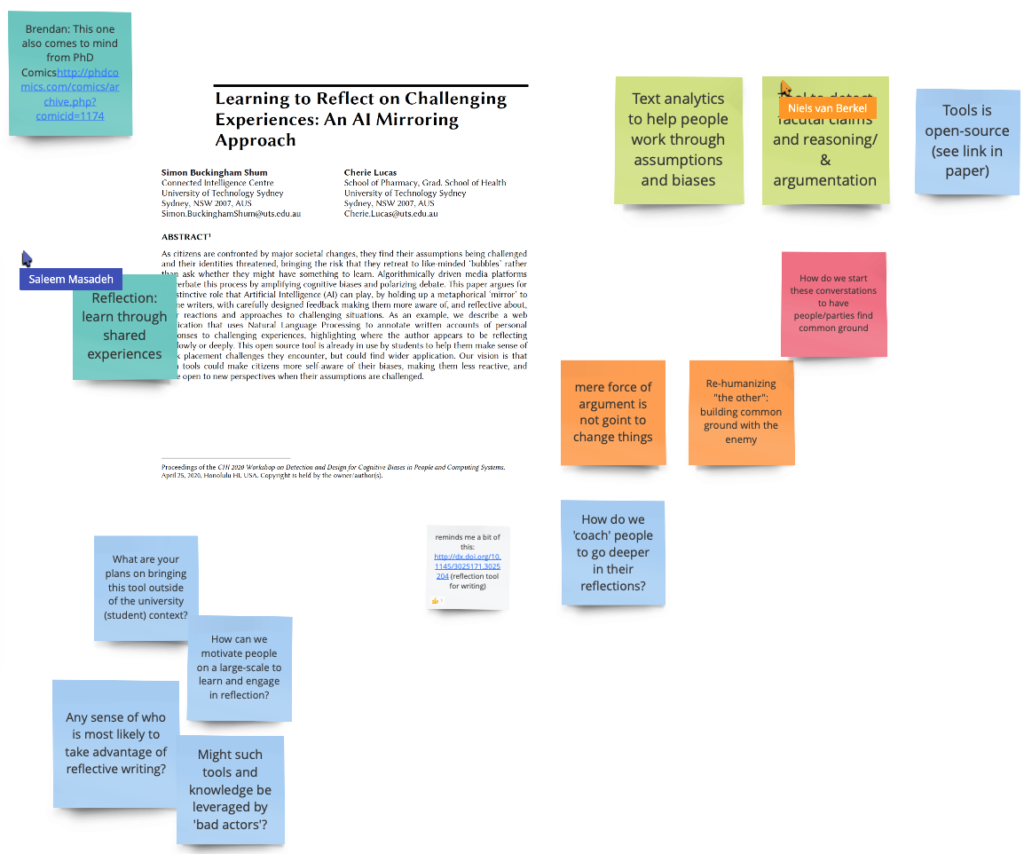

I wrote a position paper with Cherie Lucas, contextualising our work on the automated detection of written reflection. Our vision is that such tools could make citizens more self-aware of their biases, making them less reactive, and more open to new perspectives when their assumptions are challenged.

Buckingham Shum, S. and Lucas, C. (2020). Learning to Reflect on Challenging Experiences: An AI Mirroring Approach. Proceedings of the CHI 2020 Workshop on Detection and Design for Cognitive Biases in People and Computing Systems, April 25, 2020. [slides]

Abstract. As citizens are confronted by major societal changes, they find their assumptions being challenged and their identities threatened, bringing the risk that they retreat to like-minded ‘bubbles’ rather than ask whether they might have something to learn. Algorithmically driven media platforms exacerbate this process by amplifying cognitive biases and polarizing debate. This paper argues for a distinctive role that Artificial Intelligence (AI) can play, by holding up a metaphorical ‘mirror’ to online writers, with carefully designed feedback making them more aware of, and reflective about, their reactions and approaches to challenging situations. As an example, we describe a web application that uses Natural Language Processing to annotate written accounts of personal responses to challenging experiences, highlighting where the author appears to be reflecting shallowly or deeply. This open source tool is already in use by students to help them make sense of work placement challenges they encounter, but could find wider application. Our vision is that such tools could make citizens more self-aware of their biases, making them less reactive, and more open to new perspectives when their assumptions are challenged.
